Building applications with Large Language Models (LLMs) in early 2024 often feels like being part of a discovery expedition. There's plenty of advice, but it’s hard to tell what’s genuinely useful and what isn’t.

I’m Ena, the creator behind Trash Tutor, an app that simplifies waste sorting through AI technology. One of the great things about personal projects like this is discovering things you thought you understood but didn't.

I had many moments like this. There was one standout "face palm" moment while working out Trash Tutor's prompt that helped me clarify my understanding of LLMs and made me rethink some of the advice I'd been taking.

Let me share this story with you!

Introducing Trash Tutor

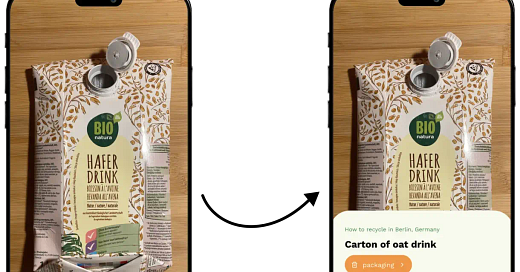

The idea behind Trash Tutor is simple: Take a photo of the item you want to toss, share your location, and it will determine the right waste category based on local guidelines.

Each category comes with a description that provides context to help Trash Tutor classifying. For example, I defined the "packaging" category in Germany as:

Plastic and metal objects like PET bottles, household foil, scrap metal (e.g. cutlery), and metal cans (drinks)

When there’s a clear match between an item and a category, like a beverage carton with the packaging category, even in the early development stages, the app consistently delivered the correct decision.

I had instructed the model to respond in a fixed JSON format:

Always return only a single JSON object {} in one of the following output formats:

1. If successful: {"status": "success", "data": {"object": "_OBJECT NAME_", "category": "_CATEGORY NAME_"}}

2. If the object on the image can't be identified: {"status": "error", "code": "unidentifiable_object"}

3. If you identify the object but still can't assign a matching waste category: {"status": "error", "code": "unidentifiable_category", "data": {"object": "_OBJECT NAME_"}}

[...]This is very useful and a common practice as it organizes the unpredictable LLM output into a clear, manageable format. In case of the carton of oat drink, it would return this:

{

status: "success",

data: { object: "carton of oat drink", category: "packaging" }

} Unlike unstructured text, the consistent structure allows for efficient access to information. It simplifies processing the output and integrating it into the application to make sure it will end up in the nice result screen shown above.

Identifying the Challenge

For cases when the right category isn't as straightforward tough, I noticed I needed to give the model a bit more instruction.

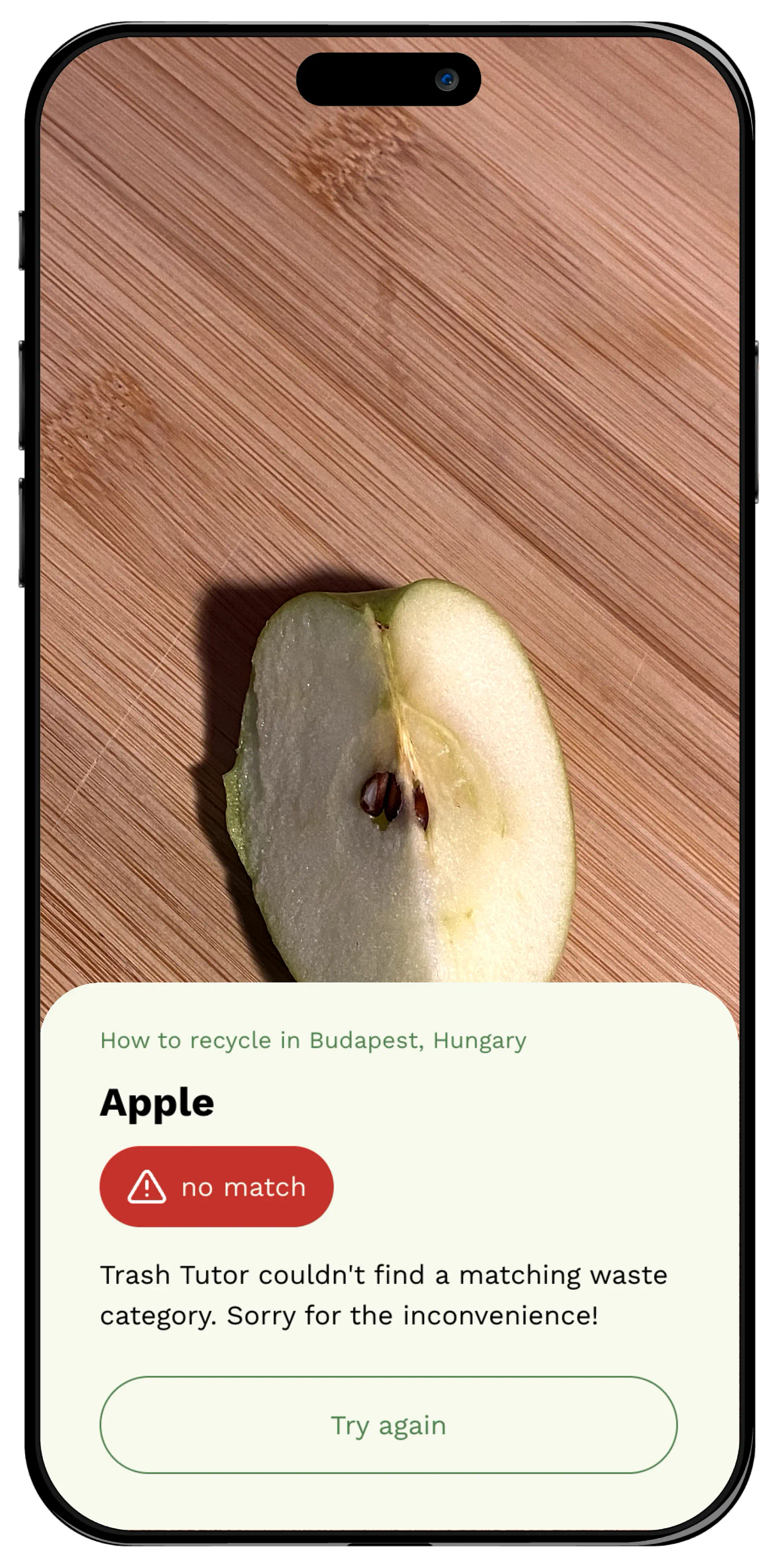

Take an apple, for instance. Normally, it belongs in organic waste. But what if your local waste categories don't include a designated bin for organic materials? In such cases, throwing it in the regular "household" trash is probably the next best choice.

Handling these more ambiguous situations requires logical thinking to choose the best available option. This type of reasoning and decision-making isn't a strong point for most LLMs without additional guidance.

However, there are several strategies to improve your prompts and get better responses from LLMs in such cases. A method that I found useful for my scenario is the Chain of Thought (CoT) technique. By breaking down our reasoning into steps, we can help the LLM follow a human-like thought process.

Here are the steps I came up with:

Step 1 - Identify the object in focus on the image and the material it is made of. Really look closely!

Step 2 - Try to assign the object to the most matching waste category available in the given set of waste categories.

Step 3 - If there is no direct matching waste category and the object does not contain hazardous material, use the 'household' category if available as a fallback.

Remember, correct categorization is crucial for waste management, but when in doubt, it is better to opt for a broader category such as 'household'.Let's revisit the example of the apple. What do you think the model chose when there's no organic option - with the new instructions? Did it pick the next best choice, the "household" category?

Trial and Error

Unfortunately, despite my detailed instructions, the model still failed to come to the right conclusion:

{

status: "error",

code: "unidentifiable_category",

data: { object: "apple" }

}Even more troubling, this wasn’t isolated to apples. By then, I had gathered a group of initial users. While they liked the app's user interface, many pointed out that on average every third picture resulted in an error. Clearly, this was far from satisfactory!

To address this systematically, I built a system, with user consent, to collect and analyze these error cases. I created a test suite with various images and used Node.js scripts to programmatically test them.

The conclusion was clear: there was a problem with my prompt. However, even after I added more detail and tried advanced prompting techniques like few-shot CoT, prompt chaining, and using emotional triggers, the improvements were minimal.

I noticed I was going down a wrong path. But what had I done wrong?

The breakthrough

After a while, I realized that the real issue wasn't that the model couldn’t figure out the right category—it was how I had set it to respond.

Opting for a simple JSON output made sense from a user interface and cost perspective, as the output was succinct and cheaper than generating lengthy text responses.

However, this approach had a significant drawback. To understand why, let's look at how a LLM works: it generates text by using patterns it has learned from vast amounts of human-generated text data. It predicts subsequent words and forms responses based on statistical probabilities rather than understanding.

Here's the crucial part: Telling the model how to “think” step by step will render useless if you limit it to spit out only the final answer.

“Thinking” in case of an LLM means actually writing it out. Unlike humans, they can't properly track their own reasoning process without it. At least as of April 2024, the time I am writing this post.

This seems to be a common misconception as I found an explicit note in the Anthropics docs on this when writing this post:

It's important to note that thinking cannot happen without output! Claude must output its thinking in order to actually "think."

When I read this, I thought “I wish I had come across this earlier!”. It took various prompt optimizations before I figured it out on my own. That said, without the struggle, I might have skimmed over it without giving it much thought.

The final solution

Once I realized what I did wrong, the solution was obvious. Just let it "think out loud".

There are multiple ways to achieve this while ensuring the output to be still parsable, e.g. "inner monologue". It means to semantically separate the thought process from the final answer. For example, by placing it inside XML tags such as <thinking> and <answer>, as Anthropic suggests for its models.

I chose to split the reasoning and JSON parsing into two distinct requests.

On one hand, I noticed the simpler the prompt, the better were the results. I like Anthropic’s explanation:

You can think of working with large language models like juggling. The more tasks you have Claude handle in a single prompt, the more liable it is to drop something or perform any single task less well.

On the other hand, I wanted to take advantage of "function calling", a way for models to respond in a structured JSON format, but more programmatically than JSON mode.

Rather than writing the desired JSON format in system or user prompts, you can describe functions, their purpose and precise parameters in a JSON schema, and instruct the model to intelligently choose one or more of them for its response. It will create a JSON object containing the arguments for the chosen function so that you can invoke it within your own programming.

This allowed me to achieve a more flexible and reliable output format. However, the gpt-4-vision-preview model I required for image recognition didn't support function calling at that time. Thus, I chose gpt-4-1106-preview, a model specifically trained for it.

Here is the final simplified prompt:

You are an expert assisting the user with domestic waste sorting.

The user's location is _LOCATION_.

The following waste categories are available: _CATEGORIES_

The user uploads a photo of an item and your task is to figure out what exactly the item is made of and select which would be the best waste category to dispose of it.

In case multiple items are visible on the photo, please choose the biggest one or the most likely one to be thrown into a bin.

If there is no directly matching category for the item, please select "household", as long as the item is not hazardous and not bulky.

Do not categorise any living being or part of it.

First, include a short reasoning about why this item needs to be disposed of this way.

Finally, finish your answer with: CATEGORY and the name of the waste category.Combined with the parsing request, I was finally happy with the results:

You can find the full prompts and complete source code of Trash Tutor on GitHub.

To wrap up

My takeaway from this experience is, there isn't a one-size-fits-all solution.

Understanding the basics of how LLMs operate is crucial. Mixing all kind of prompting techniques in an overly detailed prompt doesn't guarantee success. Contrary to some advice I'd received, simple concise prompts can be more powerful. A real eye-opener for me was seeing the minimal prompt used by Anthropic for Claude 3.

My key to sucess was figuring out a systematic way to collect real user feedback to creatively experiment with various solutions until I found what works. It turned out to be much more effective than just following textbook methods.